_NOPE_

Members

Posts: 2543

Days Won: 7


Everything posted by _NOPE_

-

Thanks! These have all been confirmed processed and moved to the processed folder manually!

-

So, if you get any more of those "failed to move from /processing" messages, just drop the filename here, and I'll verify that the zip file has been received, and then move the original WARC to the complete folder. Sometimes I just gotta clear out that queue.
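For anyone curious what that manual cleanup amounts to, here's a minimal Python sketch. It assumes hypothetical `processing`, `received` and `complete` folders. It also assumes a hypothetical naming rule where a WARC's zip is named after the WARC minus its `.warc`/`.warc.gz` extension; the post doesn't say how the zips are actually named.

```python
from pathlib import Path
import shutil


def warc_stem(warc_name: str) -> str:
    """Strip a '.warc.gz' or '.warc' extension (hypothetical naming rule)."""
    for suffix in (".warc.gz", ".warc"):
        if warc_name.endswith(suffix):
            return warc_name[: -len(suffix)]
    return warc_name


def finalize_stuck_warc(warc_name: str, processing_dir: Path,
                        received_dir: Path, complete_dir: Path) -> bool:
    """Move a WARC from processing to complete, but only once its zip arrived.

    Returns True if the WARC was moved, False if the zip hasn't been received.
    Raises FileNotFoundError if the WARC isn't in the processing folder.
    """
    expected_zip = received_dir / (warc_stem(warc_name) + ".zip")
    if not expected_zip.is_file():
        return False  # zip never made it, so leave the WARC where it is
    complete_dir.mkdir(parents=True, exist_ok=True)
    shutil.move(str(processing_dir / warc_name), str(complete_dir / warc_name))
    return True
```

Checking for the zip first means a WARC whose upload really did fail stays in the processing folder, where it can be retried.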

-

All of those sound like temporary connection issues. Just go ahead and try again. I've cleared out all of the "Processing" files, and moved that last one to the complete pile! Thanks!

-

How are things running for you all? Any errors yet?

-

Also, please delete and do NOT use any of the previous versions, as they'll still keep spitting out "corrupted" files!

-

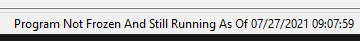

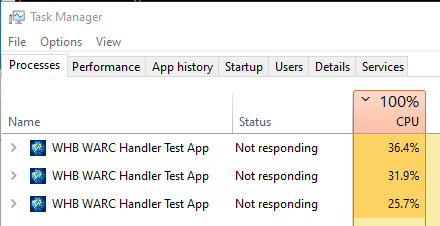

I've just pushed out version 2.0 of the COH WARC Processor to my site, so anyone who wishes to help with the project can get to Spelunking again! I'm sorry that we had to restart the project, but I was completely unaware of the aforementioned "chunking" that Archive.org did. This caused many, MANY of the files to be corrupted and unusable. However, after reworking essentially the ENTIRE process, and switching to a "byte by byte" reconstruction of the original files rather than going line by line with a StringBuilder, I can now report a 100% success rate on several files, one of them over 4 GB in size!

Version 2.0 makes a couple of other changes:

Since I'm going byte by byte on chunked data, and line by line on non-chunked data, there is no easy way for me to display any sort of "progress" on the individual WARC processing. So, the processing progress bar has been replaced with a notification timestamp and message in the main window stating how many files have been found within the WARC file thus far. This way, you know that the program is at least still running, as long as the timestamp is fairly recent:

The "line by line" method was actually much slower and used fewer resources, so while users were able to run more instances, they didn't accomplish much very quickly. The new byte-by-byte process is faster, BUT consumes way more processing power. I don't suggest running more than two or three instances of the program at once, unless you have an amazing processor. Each instance took up about 33-41% of my CPU at any given time:

I've updated the original post to reflect the updated program and to give newbies a better introduction to the project. Thank you all for your efforts so far, and for any continuing efforts you wish to provide!
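A "files found so far" notice can stand in for a progress bar when the total isn't known up front. Below is a minimal Python sketch of the idea using hypothetical names. `found_files` is assumed to be any iterable that yields each file as it's extracted. `notify` is whatever updates the window, which here is just a callback. The clock is injectable so the output can be checked.

```python
from datetime import datetime
from typing import Callable, Iterable


def report_progress(found_files: Iterable[str],
                    notify: Callable[[str], None],
                    every: int = 100,
                    clock: Callable[[], datetime] = datetime.now) -> int:
    """Emit a timestamped count every `every` files, plus a final summary."""
    count = 0
    for _ in found_files:
        count += 1
        if count % every == 0:
            notify(f"{clock():%Y-%m-%d %H:%M:%S} - {count} files found so far")
    notify(f"{clock():%Y-%m-%d %H:%M:%S} - done, {count} files found")
    return count
```

Only posting every N files keeps the notices from flooding the UI. As long as the latest timestamp is recent, the processor is still alive.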

-

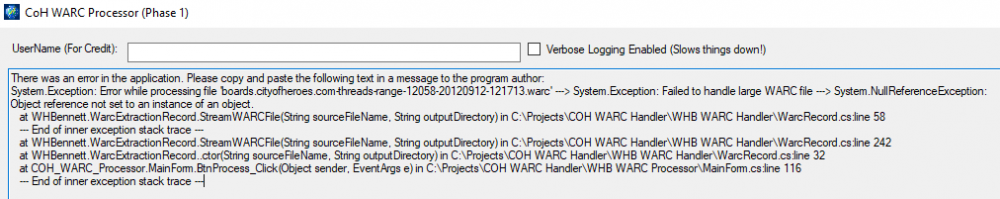

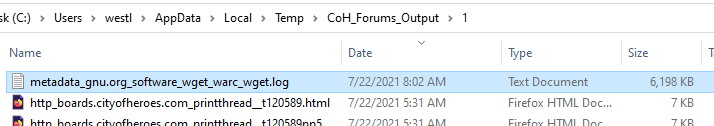

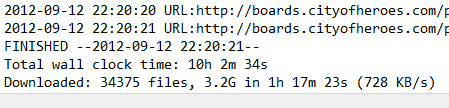

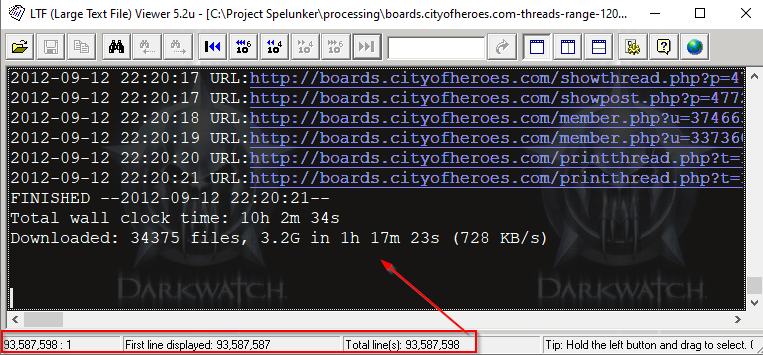

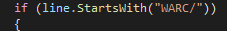

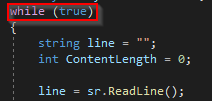

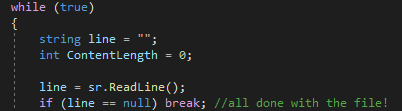

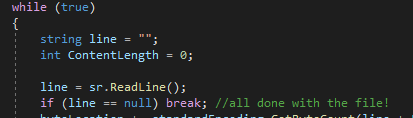

Alrighty, let's check on that 4 GB test file that's been sitting in processing for 3 days now...

Uh-oh, THAT'S not good... Hmm....

I wonder what the last file that processed was... A wget.log file? Hmm.... sounds like something that the people at Archive.org might have made. Let's open it up, look at the end of the file, and see how far I got until it failed:

Well... that LOOKS like it finished that file, so what was the problem? Time to dig into the source WARC using my good old trusty Large File Viewer (http://diggfreeware.com/ltfviewr-great-free-large-text-viewer/), and take a look to see how far I got:

Oh.... so it finished the whole WARC file? So why did it fail? What line in my code failed?

OHHHHHH...... if there is no line... the line will be NULL. If you try to call the "StartsWith" method on a null object, you'll get a null reference exception. D'oh!

Well, that's no problem. I'm sure that I'm accounting for reaching the point where I can't read any more data in my loop, right? Uh..... while (true)? But... wouldn't that just create an INFINITE LOOP???? What moron would write something like that???? Well... apparently me.

So, I just add the following line:

Now, to run the file one LAST time just to make sure that NOTHING goes wrong with the rest of the steps in the process (zipping up the files, uploading them, etc.)...
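That bug boils down to a read loop with no end-of-file exit. In C#, `StreamReader.ReadLine()` returns `null` at the end of the stream, which is why `StartsWith` blew up.

Here's a minimal Python sketch of the guarded loop. In Python, `readline()` returns an empty `b""` rather than null, so the check is `if not line`. The record-counting function is hypothetical and only gives the loop something to do.

```python
from typing import BinaryIO


def count_warc_records(stream: BinaryIO) -> int:
    """Count WARC records by their version line, stopping cleanly at EOF.

    Naive on purpose: a payload line that happens to start with b"WARC/"
    would be miscounted; a real parser would skip payloads by Content-Length.
    """
    records = 0
    while True:
        line = stream.readline()
        if not line:  # b"" means end of file (C#'s ReadLine() returns null here)
            break
        if line.startswith(b"WARC/"):  # e.g. b"WARC/1.0\r\n"
            records += 1
    return records
```

Writing the loop as `for line in stream:` sidesteps the sentinel check entirely, since the iteration simply ends at EOF.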

-

Update - I'm JUST about there and ready to release the next version of the processing program into the wild; there are just a couple of bugs I need to work out. I want to make sure I can process at least ONE of the largest files all the way through without a single error before I release it. I found two bugs, and I THINK I have them fixed. But since I'm going byte by byte, which is a slow process, and the errors seem to happen near the end of the file (because of COURSE they do), it takes me a couple of days from when the process starts to when I reach the error and can troubleshoot it further. So that's why this has taken so long.

Good news is, it looks like the Google route will probably work. As you can see here, Google is already indexing the first "imperfect" version of the output: https://www.google.com/search?q=site:cohforums.cityofplayers.com&ei=RxD4YOf4JMGDtQbG642gCg&start=200&sa=N&ved=2ahUKEwinuKKzkvTxAhXBQc0KHcZ1A6QQ8tMDegQIARA4&biw=1920&bih=927

Right now, it's just a bunch of user profiles, but once I get this new version out and can start making a sitemap of ALL of the files and submit it to Google, that should expedite the process substantially!

-

Here, LMGTFY... Have you checked the mentioned ports "59591" and "59581" on your local network and firewall to make sure that they are open ports? Because it appears that they are being blocked, sir or ma'am. I just tested it on my system, installing a brand new mod onto a brand new install... and it appeared to work fine, from what I can see.
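If you want to poke at those ports yourself, here's a minimal Python sketch. It assumes the ports are TCP and that the program using them is already running and listening on your machine. A successful local connection doesn't prove that your router or firewall lets outside traffic in. `is_port_reachable` is a hypothetical helper built on the standard `socket.create_connection` call.

```python
import socket


def is_port_reachable(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # covers refused connections, timeouts and unreachable hosts
        return False
```

For example, call `is_port_reachable("127.0.0.1", 59591)` and then do the same for 59581. `False` means either nothing is listening or something is blocking the connection.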

-

Yeah, I know right? I mean what moron made this stupid thing???

-

VidiotMaps for Issue 24 and Beyond

_NOPE_ replied to Blondeshell's topic in Tools, Utilities & Downloads

Just delete your /data folder. All VidiotMaps does is put replacement files in that folder. If it doesn't work, then it sounds like it's time for a delete and reinstall.

-

Also, my family is going on a week-long vacation starting... tomorrow at 4:30am. So, needless to say, this whole thing is on pause for the next week. After that, I expect to get an updated version of the processor made and pushed out to everyone who wants to help.... again. 🤪

-

Bad News: Most likely we're going to have to re-run the whole project using this NEW method. The old method left the files filled with chunk-size markers, and it broke many of the images, because they were also chunked and so the byte offsets in them were off.

More info about what I had to deal with can be found in the link below, but in short: sometimes the data from the Internet Archive was recorded using a Content-Length header and grabbing all of it at once, and SOMETIMES it was recorded using "chunks", which you can read more about here: https://stackoverflow.com/a/32204608/7308469

The "chunked" stuff in the last run was all broken, because I didn't know anything about it! Now that I have it figured out, we can process the files correctly, albeit more slowly.

wat is this i cant even
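Here's what "chunked" looks like in practice. Each chunk is a hexadecimal size line, then that many bytes of data, then a CRLF. A chunk of size 0 ends the body.

Below is a minimal Python sketch of recovering one archived HTTP response body. It assumes the response headers have already been parsed into a dict with lower-cased names; header and WARC-record parsing aren't shown. `read_http_body` and `dechunk` are hypothetical names, not the processor's actual code.

```python
from typing import BinaryIO, Mapping


def dechunk(stream: BinaryIO) -> bytes:
    """Reassemble a chunked HTTP body, dropping the size lines and CRLFs."""
    body = bytearray()
    while True:
        size_line = stream.readline()
        if not size_line:
            raise EOFError("stream ended before the terminating 0-size chunk")
        # The size is hex and may be followed by ";name=value" extensions.
        size = int(size_line.split(b";", 1)[0].strip(), 16)
        if size == 0:
            break
        chunk = stream.read(size)  # copy exactly `size` raw bytes
        if len(chunk) != size:
            raise EOFError("truncated chunk")
        body += chunk
        stream.readline()  # consume the CRLF that follows each chunk's data
    # Skip any trailer headers up to the blank line that ends the message.
    while stream.readline() not in (b"\r\n", b"\n", b""):
        pass
    return bytes(body)


def read_http_body(headers: Mapping[str, str], stream: BinaryIO) -> bytes:
    """Pick chunked or Content-Length reading based on the response headers."""
    codings = [c.strip().lower()
               for c in headers.get("transfer-encoding", "").split(",")]
    if codings[-1] == "chunked":
        # Any earlier coding (e.g. gzip) still applies to the returned bytes.
        return dechunk(stream)
    # Assumes Content-Length is present when the body isn't chunked.
    return stream.read(int(headers["content-length"]))
```

The `read(size)` calls copy raw bytes and never split the body into text lines. That is what keeps binary payloads like images intact.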

-

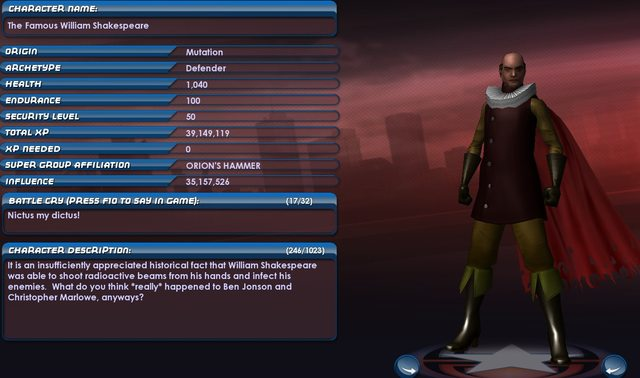

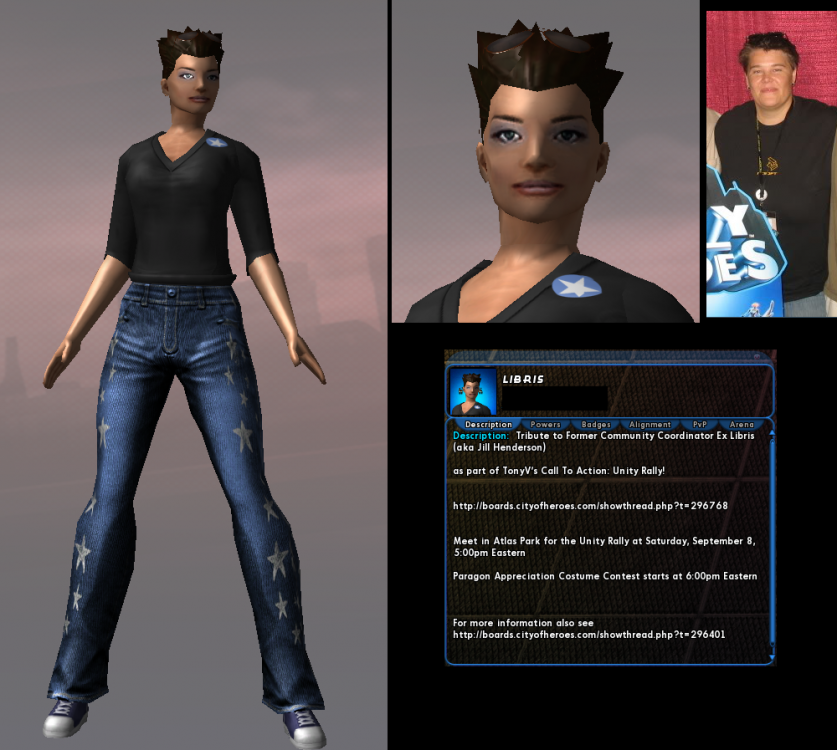

Here's some that I can recognize right away from the old forums...

@Heraclea Starflier Backasswards Dragonberry

The infamous Golden Girl

@Christopher Robin

@Oubliette_Red .... oh wait, they're still using that one! 😛

... wasn't this one Memphis Bill's? I know I remember seeing this one!

@Zekiran Immortal

@Samuel Tow

@Hyperstrike hasn't changed his either (not that I can point fingers either... heh)

@Samuraiko also hasn't changed hers in all these years!

Oh, and who could forget these things around the time Going Rogue came out:

Oh, this is kind of a cool find:

-

The good news is that after many hours of banging my head on a concrete wall, I finally sussed out how to correctly process large WARC files with NO errors in the data content. That means HTML files no longer have random chunk-size digits strewn throughout, and binary files (like images) now show up correctly. You MAY recognize a few of these...
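A related pitfall with any line-by-line, text-based rebuild is that binary data doesn't survive the round trip. This assumes the bytes get decoded to text before being stitched back together, as with a StringBuilder.

This small Python sketch, using a hypothetical `text_round_trip` helper, shows the 8-byte PNG signature getting mangled.

```python
PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"  # the first 8 bytes of every PNG file


def text_round_trip(data: bytes) -> bytes:
    """Mimic a line-by-line text rebuild: decode, split into lines, rejoin."""
    text = data.decode("utf-8", errors="replace")  # 0x89 isn't valid UTF-8
    # Splitting and rejoining with "\n" also loses the CRLF and trailing newline.
    return "\n".join(text.splitlines()).encode("utf-8")


print(text_round_trip(PNG_SIGNATURE))  # → b'\xef\xbf\xbdPNG\n\x1a'
```

Copying the payload as raw bytes avoids both problems, because nothing is ever decoded or re-split.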

-

<Inserting cryptic comment here:> You guys have NO idea just how happy I am to see this image: Good News and Bad News coming Soon™ Which do you want to hear first? 😄

-

Force Field (Cottage Rule Needs Not Apply)

_NOPE_ replied to Apparition's topic in Suggestions & Feedback

@OmegaOne I would LOVE to have to deal with the problem of FF being TOO good, for once.

-

Force Field (Cottage Rule Needs Not Apply)

_NOPE_ replied to Apparition's topic in Suggestions & Feedback

That's fair. Again, I'm not a numbers guy. I'd leave it to the Devs to balance that. Precisely my thought. It may require new code/technology to be written, but it'd also open the door for other powers to have a "dual purpose" like that. Target? "Trap" the target. No target? "Trap" yourself.

-

Force Field (Cottage Rule Needs Not Apply)

_NOPE_ replied to Apparition's topic in Suggestions & Feedback

@plainguy I don't mean to toot my own horn, but I can't imagine any changes to what I said then. Those suggestions do the following things for the set: merge redundant powers, fortify and amplify underutilized powers, and add new powers that match the "theme" of the set and add new tools to an FFer's toolbox. In my opinion, I nailed it. Now, the NUMBERS I can't speak to; I've never been a numbers guy. I'd trust the devs to figure out what those numbers need to be to make things balanced. But I think the intention is clear, and it shows the necessary path forward to make FF more desirable again, especially at the higher levels.

-

Oh, the files are there (mostly), if you want to BROWSE through all of them... 😉: http://cohforums.cityofplayers.com/files/

From the first: http://cohforums.cityofplayers.com/files/boards.cityofheroes.com-threads-range-11140-20120904-045005/http_boards.cityofheroes.com_showthread__p1.html

To the last (as of the moment the Internet Archive took their snapshot on 09/07/2012): http://cohforums.cityofplayers.com/files/boards.cityofheroes.com-threads-range-29687-20120907-012111/http_boards.cityofheroes.com_showthread__t296879.html

Otherwise, you might want to wait until I get a sitemap finished up; then Google will be able to search them. And I want to hold off on that until I get those last ten HUGE files processed. Soon™
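Once the file list is final, the sitemap itself can be scripted. Here's a minimal Python sketch following the sitemaps.org protocol.

The protocol caps each sitemap file at 50,000 URLs and 50 MB uncompressed. A larger archive therefore needs several sitemap files, tied together by a sitemap index (the index isn't shown here).

The sketch assumes the relative paths are already URL-safe, like the filenames above. `build_sitemaps` is a hypothetical helper.

```python
from typing import Iterable, List
from xml.sax.saxutils import escape

MAX_URLS_PER_SITEMAP = 50_000  # per-file limit in the sitemaps.org protocol

SITEMAP_HEADER = (
    '<?xml version="1.0" encoding="UTF-8"?>\n'
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">\n'
)


def build_sitemaps(base_url: str, relative_paths: Iterable[str]) -> List[str]:
    """Return one or more <urlset> documents covering every relative path."""
    base = base_url.rstrip("/") + "/"
    urls = [base + path.lstrip("/") for path in relative_paths]
    documents = []
    for start in range(0, len(urls), MAX_URLS_PER_SITEMAP):
        batch = urls[start:start + MAX_URLS_PER_SITEMAP]
        # escape() turns &, < and > into XML entities, as the protocol requires.
        entries = "".join(f"  <url><loc>{escape(url)}</loc></url>\n" for url in batch)
        documents.append(SITEMAP_HEADER + entries + "</urlset>\n")
    return documents
```

The paths could come from walking a local copy of the `/files/` folder: take `p.relative_to(root).as_posix()` for each `p` in `root.rglob("*.html")`. Each returned document would then be saved as its own sitemap file.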

-

I mean, making a basic UI for it would be easy, and writing the code to translate between the UI and the final file format (and vice versa) would be easy. Without having actually looked at the files yet, though, my first guess for the biggest challenge would be obtaining the data for every single option. My guess is that (as with everything else in CoX code, it seems) the data would be stored in an obscure set of hierarchical files on the server. So we'd need the HC team to "volunteer" that... ...unless some player(s) want to go through the EXTREME effort of choosing EVERY possibility in the MA system and saving them to various mission files, then sending those files to the programmer to scour for the needed data. But..... YEESH, I'd hate to be a part of a project like THAT.

-

Solarverse's SFX Consolidated List of Mods

_NOPE_ replied to Solarverse's topic in Tools, Utilities & Downloads

If it exists in an old YouTube video, maybe you could isolate the sound?

-

Solarverse's SFX Consolidated List of Mods

_NOPE_ replied to Solarverse's topic in Tools, Utilities & Downloads

Well, I mean, you COULD scour Leandro's original leak, they're probably still there...