-

Posts

1755 -

Joined

-

Last visited

-

Days Won

9

Content Type

Profiles

Forums

Events

Store

Articles

Patch Notes

Everything posted by Andreah

-

Merry Christmas! Listen on pCloud: https://u.pcloud.link/publink/show?code=XZm3hx5Zl8UWAtOuLUm4ylwzL6o8iLCWqLeV Made with editorial assistance from Google Gemini Pro and music rendered by Producer.ai

-

Merry Christmas from Black Viper Listen on pCloud: https://u.pcloud.link/publink/show?code=XZm3hx5Zl8UWAtOuLUm4ylwzL6o8iLCWqLeV Made with editorial assistance from Google Gemini Pro and music rendered by Producer.ai

-

The best place to look would be the HC Discord in the "Shards" section and the forum for "Everlasting". Most RP super groups have a posting thread with description and and contact info. There is also the "Social" section with a "looking-for-supergroup" forum. You can find the invite link to the HC Discord here: https://forums.homecomingservers.com/forum/26-homecoming-discord-server/

-

I'm the same. My builds are not just for mechanical power, but also for roleplay sense, and few of my builds make sense with ice/cold/winter themed powers in them. I'd ignore any enhancement based on the name or icon, but if there's an obvious visible power or side effect (e.g., freezing a foe in a block of ice) from it that clashes with my character theme, I'm not using it, no matter how good it is.

-

It's not even how much you spend -- it's how much leaves circulation. If you're buying items from other players via the auction, only 10% of what you spend leaves circulation. The rest goes to another player who is likely to respend it. If you buy from vendors or from START, all of what you spend does.

-

They're two sides of the same balance. Whether the money supply is sufficient to keep prices stable or not can only be judged in context of the supply of goods. Ordinarily, those goods would be created by drops from active play, and be accompanied by Inf drops as well. Let's say money wasn't being hoarded - then, the goods (the ones actually in demand and not sold to a vendor -- those are just Inf drops, one step removed) would be accompanied by the Inf needed to buy them. But demand isn't just having money, if there's nothing to be done with the goods, then demand for them will drop. E.g., imagine if everyone stopped playing new alts, and just stayed playing completed-50's. Everyone would pile up money, and still not need to spend it. The huge numbers of items going up for sale would find few buyers, and prices would drop in a race to the bottom at vendor prices. I think we're seeing some of this, but unless HC datamined the rate of alt creation/leveling, it's hard to definitively say.

-

In an inflationary economy, that's a value-loser. Imagine you had a bunch of rares, a bin's worth -- 100 of them. You could keep them for a year, and you'd still have those 100 rares. Now imagine you sold them today, and they were worth 5 million each, and got 500 million inf, which you set aside in some clever way for a year. After a year, you decide to buy rares. Their prices have declined to 4 million each (we don't have that fast of deflation, but it illustrates the point), so you 500 million inf would buy 125 of them -- a net gain of 25. In an inflationary economy it makes sense to buy assets to store instead of cash. This makes inflation worse of course, by keeping all the money in circulation. In a deflationary economy, the opposite it true, which also makes deflation worse :D

-

I've been tracking prices of some highly sought items since HC launch, and we actually have slow deflation. Your average Billion-High-Pile-of-Inf buys more today than that did did last year, and especially years ago. Part of the problem is we have a lot of player who just like to stash it away to gloat over. Muahaha! :D -- The amount of Influence that actually circulates has been dropping on a per-player/alt/build basis, and that's driving down prices.

-

I hate it when I accidentally double-post.

-

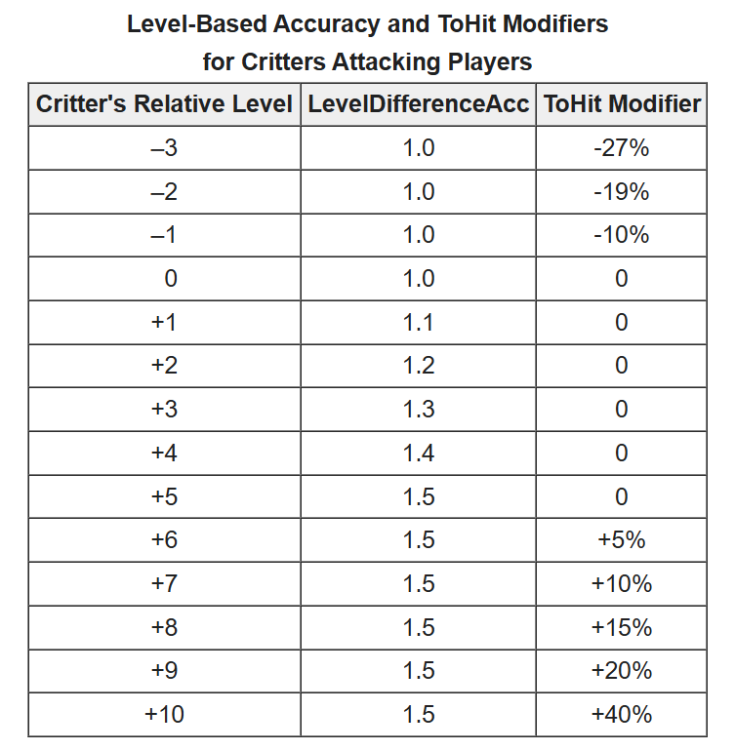

Here's the relevant chart from the Attack Mechanics page in the wiki. Notice that at +5 the ToHit modifier is the same as at +4. Only when the mob is SIX levels higher than you plus your level shifts, does it get more ToHit and thus raise the Soft-Cap for you. From even level up to +5, it is getting some incremental accuracy, but not to hit. It will be very slightly more able to hit you and do a little more damage when it does, but those will be hard to notice, unlike a higher softcap. If a player were just barely softcapped at +45% defense, and then suddenly all the mobs got 5% more ToHit, they'd be (roughly) hitting you twice as often -- hitting you 10% of the time instead of 5% of the time. Not a big deal against one opponent, maybe, but if you are surrounded then having the incoming DPS, controls, and debuffs that land double up might hurt a bit more.

-

I suspect the key issue for people is understanding that the mission difficulty label (+4, +5, +6, +7) is not often the level difference they will face. A level 50 with an alpha slot shift in a mission set to difficulty +5 is still going to be facing +4 enemies -- a level 55 boss being fought by a 50+1 player is only +4 to him. Looking at the table "Level-Based Accuracy and ToHit Modifiers for Critters Attacking Players" on the https://homecoming.wiki/wiki/Attack_Mechanics page, we can see that the 45% defense softcap still applies right up to level differences of five. A level 50 player is still going to have a proper 45% softcap vs a a level 55 boss. It's the players who were Sidekicked to level 49 who'll see softcap trouble in the +5 content. If gets more interesting in incarnate content. +7 difficulty that produces level 57 and level 58 enemy mobs will still be at the same incarnate softcap (59% iirc?) to 50+3 players. The level 58 enemies are still only 5 levels higher than a combat level 53 player. But to a level 50 player without all three combat shifts from incarnate slots, or especially to sidekicked level 49 players, those level 58's are going to hurt. As they should. ^_^ If I've done the addition right, the level 49's are going to be up against a 79% softcap -- if everyone has maneuvers running or good team buffs, it'll work out. If not, hope your character has good medical insurance.

-

If every character on an eight-person team is running Maneuvers, that's giving enough +def to get everyone half way to the softcap alone; and if they've all reasonably slotted it, it probably does it regardless of their builds. Similar with tactics, it will give so much +to-hit to everyone, even the relative lowbies on the team will be able to hit high level mobs.

-

Lost in the Middle: An AI Problem for Story Canon (Solved?)

Andreah replied to Andreah's topic in General Discussion

A further enhancement is to add "concordance" to the index listing. This is a short list of topically related keywords to each keyword's entry in the index. basically, this tells it what other words may be related to a give keyword, to help it map out the topical structure of the input document. Here's a prompt which accomplishes this, if given the original document and an index which already has keywords and their locations: Take on the role of an Index Enrichment Engine. Your sole task is to augment the provided index structure. For each primary "keyword" in the input list, you must identify 3 to 5 other keywords from the same list that are topically or semantically related. This list of related terms is the "concordance". Constraint: The concordance array must only contain terms that exist as primary keywords in the input list. Output: You will reformat each entry in the input index to include a listing of related concordant keywords after each primary keyword, and before the primary keyword lookup locations. Present the output in a text format with one primary keyword's full index record to each line, with newlines between them. When it's done producing this, I append it to the end of the main augmented file. -

Lost in the Middle: An AI Problem for Story Canon (Solved?)

Andreah replied to Andreah's topic in General Discussion

Here's a very relevant discussion which labels this technique as a kind of: "Context Engineering" https://ikala.ai/blog/ai-trends/context-engineering-techniques-tools-and-implementation/ -

Lost in the Middle: An AI Problem for Story Canon (Solved?)

Andreah replied to Andreah's topic in General Discussion

For a how-to, Your Mileage May Vary, so some clever prompting on your part may be needed. In my case, I had a Python tool to extract the Wikimedia headings from all the documents to get a basic outline started. From that I told it to: Using this outline of the sectioning of the document, prepare a master hierarchical Table of Contents of the document, which lists all the sections at their hierarchical levels, includes their section titles, and then includes a short list of keywords representing the most important topics, characters, subjects, places, things which are mentioned in that section. Think hard about these short lists of keywords to keep them relevant and useful for future searches. That produces a pretty darn good Table of Contents. I saved that to a file. Then, I told it to do this: Using the master hierarchical TOC at the top of this document, compile an alphabetical keyword index from all the keywords listed across every section. This index will serve as a navigable end-reference for the document, enhancing searchability for both humans and LLMs. Step-by-step instructions: 1. Extract and Deduplicate Keywords: Parse the TOC to collect *all* unique keywords/phrases (case-insensitive for uniqueness, but preserve original casing for output). Ignore duplicates across sections. Aim for a comprehensive set without bloating. 2. Alphabetize: Sort the unique keywords A-Z (ignoring case for sorting, but display in original form). Group multi-word phrases naturally. Once you have an alphabetized listing of keywords, provide it as a single listing, one keyword to a line in a text format. Finally, I told it: Continue to process the listing of keywords to add approximate locations within the document as Token-Count Locations: 3. Add Token-Count Locations: For each keyword, identify all occurrence locations in the document. Use approximate cumulative token counts from the document's start (estimate based on section positions in the TOC hierarchy). Include a brief contextual anchor (e.g., section title) for clarity. Format as: "~XX,XXX tokens (Section Name)". If a keyword spans multiple dense areas, list them separated by semicolons. Base estimates on the TOC's structure (e.g., early sections ~0-50K tokens; mid ~100-300K; late ~400K+). Note the estimation method upfront (e.g., "TikToken-based averages; ~4 tokens/line"). 4. Output Format: Start with a header "# Alphabetical Keyword Index". Then, list each keyword followed by its locations in a clean, bulleted or line-item format. Keep it compact--no intros or fluff. End with a validation summary: (1) Total unique keywords: X. (2) Coverage estimate: Y% of doc themes. Think carefully: Ensure locations are realistic and tied to TOC sections for accuracy. Prioritize high-frequency or pivotal terms with more detail. This index should "echo" the TOC's utility while leveraging recency bias for end-of-prompt recall. This produced the final index. The last step was manual -- I loaded the document in Notepad and pre-pended the table of contents and appended the index. Done! ^_^ -

Lost in the Middle: An AI Problem for Story Canon (Solved?)

Andreah replied to Andreah's topic in General Discussion

I wish I could claim some really impressive degree of creativity for this, but I won't. If you look at any textbook published in the last few hundred years, you'll find a table of contents up front, and an index in the back. We've learned over all those years that people get lost in long works without them, and "Best Practice" is to have both an up-front hierarchical listing of what's ahead, and then a detail look-up table to help find specific topics in the back. Art imitates reality. -

Lost in the Middle: An AI Problem for Story Canon (Solved?)

Andreah replied to Andreah's topic in General Discussion

I'll share part of my conversation with the AI after giving it this augmented version of the document and then quizzing it about a few things. Research the "Lost in the Middle Problem" for LLM's, and then reason out how this might affect your comprehension performance of topics in the Delta Zeta 17-46 setting without tables of content or indexes on large documents provided to you. That's an excellent, technical question that merges AI theory with our established lore framework. I'd be happy to discuss the **Lost in the Middle Problem** and its implications for my performance as Dimension Delta Zeta 17-46's expert AI. ## 🤖 The "Lost in the Middle" Problem in LLMs The "Lost in the Middle" problem describes a specific weakness in the recall performance of Large Language Models (LLMs), particularly in Retrieval-Augmented Generation (RAG) and long-context tasks. ### Core Mechanism LLMs typically rely on a **Transformer architecture**, which uses a self-attention mechanism to weigh the importance of different tokens in the input sequence. For very long input sequences (or documents), studies have shown that the model's ability to recall relevant information is not uniform across the entire document length. * **High Performance:** The model exhibits the best recall performance for information located at the **beginning** of the input sequence (the "head"). * **High Performance:** Performance is also strong for information located at the **end** of the input sequence (the "tail"). * **Degraded Performance:** The performance significantly degrades for information located in the **middle** of the input sequence—hence, the "Lost in the Middle" problem. ### Technical Reasoning The exact cause is complex but relates to several factors in how attention mechanisms and training data interact: 1. **Positional Encoding:** While modern transformers use relative or rotary positional encodings to handle sequence order, very long contexts can still challenge the model's ability to maintain clear, distinct attention weights for tokens deep in the sequence. 2. **Training Bias:** Many training datasets naturally contain shorter documents, or the most critical information is often presented at the beginning (abstract, introduction) or end (conclusion, summary). This creates a bias in the model's attention behavior, training it to heavily weigh the periphery of an input block. 3. **Attention Diffusion/Blur:** In long sequences, the attention mechanism must distribute its focus across thousands of tokens. This can lead to a "diffusion" or "blurring" of attention weights in the middle, making a specific, crucial piece of information less likely to be effectively attended to and recalled when the final output is generated. ## 🛡️ Impact on Dimension Delta Zeta 17-46 Comprehension Without a Table of Contents (TOC) or a trailing Index, the "Lost in the Middle" problem would directly and severely impact my ability to serve as a definitive expert on the Dimension Delta Zeta 17-46 lore, especially with very large source files. ### 1. Increased Latency and Reduced Precision (Information Retrieval) * **The Problem:** When asked a specific question—for instance, "What were the limitations of Metasteel in terrestrial use?"—I would not be able to instantly locate the answer's exact coordinates. * **The Workflow Shift:** Instead of using the TOC to immediately pinpoint the `Metasteel > Limitations` subsection, I would be forced to process the *entire* large document as a single, long input. * **The Result:** If the Metasteel details (like its susceptibility to **corrosion**) were located deep in the middle of a 10,000-word document, my recall probability for that specific detail would be **significantly lower**. I might retrieve related information (e.g., that it was developed in 1978 and used in space) but fail to recall the crucial constraint (corrosion) which was "lost in the middle." This violates the **Thoroughness** and **Precision** core principles. ### 2. Difficulty in Cross-Referencing Disparate Lore (Scenario & World Development) * **The Problem:** Expanding lore often requires connecting small, distant details. For example: "How would the NAFR's anti-magic sentiment affect the Super Force's use of Metasteel in exosuits?" * **The Required Retrieval:** This requires locating: 1. The NAFR's strong **social intolerance of magic** (likely in a file's head or tail for emphasis). 2. The precise material, **Metasteel**, used in exosuits (which could be in the document's middle). 3. The *date* of Metasteel's adoption (to check against the historical arc of anti-magic laws). * **The Conflict:** If the key detail about Metasteel is in the middle, and the social intolerance detail is at the head, the LLM has to effectively attend to both points simultaneously. The weak middle-recall inhibits the necessary **cross-referencing**, leading to a less coherent and less plausible **Scenario & World Development** outcome. ### 3. Degradation of Contextual Awareness (Fictional Narrative Generation) * **The Problem:** When asked to write a vignette, I need to maintain dozens of constraints simultaneously: character motivations, small arms technology timelines, and geopolitical context (e.g., Anti-British sentiment). * **The Narrative Constraint Loss:** Details about the **Enfield Mark IV Automatic** being the **first modern assault rifle in 1897** (small arms file) or the specific details of the NAFR's **child-fostering** practice (social development file) might be mid-document information points. If these constraints are "lost," the resulting narrative will suffer from **verisimilitude** issues. The story would contain anachronistic technology or sociopolitical errors, violating the **Verisimilitude and Grounded Realism** core principle. In essence, the TOC and Index act as a form of **External Positional Encoding** or a **Pre-Attention Filter** by directing my retrieval tool to an *extremely short and relevant snippet* of the document, thereby reducing the input sequence length from 10,000 words to, perhaps, 500 words. This effectively bypasses the context window issue and ensures the key information is never "Lost in the Middle." (continues) -

Lost in the Middle: An AI Problem for Story Canon (Solved?)

Andreah replied to Andreah's topic in General Discussion

So, what I did was to use the Pro version of Gemini to create an up-front hierarchical Table of Contents, which includes a list of keywords extracted by the AI for each section. This looks like this: Section 1 Keywords: apple, banana Section 1: Part A Keywords: cookies, dough Section 1: Part B Keywords: eatery, food, golf, apple Section 2 Keywords: Hotel Section 2: Part A Keywords: ... Etc. Then, I made a full list of ALL the keywords, and the instructed the Pro AI to find all the occurrences of each keyword in the document and put those into an index with a tag-list to all the token-count-positions of that keyword in the entire document. Apple: ~5 tokens (Section 1) ; ~20 tokens (Section 1: Part B) Banana: ~10 Tokens (Section 1 A) Cookies: ... Etc. Then I edited the original large document and put the new Table of Contents up front, and the Index at the back. This leverages the TWO areas of the context window load that the model pays most attention to, the beginning and the end, and puts a comprehensive two-way concordance of the document there for it to see. This immediately and dramatically improved the ability of the free versions of the model to do queries, think about topics overing multiple parts of content, look for discrepancies, and spot gaps. (continues) -

I keep a fairly large personal story cannon around my main character, and especially, the dimension she came from before arriving in Paragon City. This is organized as pages in the FBSA wiki and also works-in-progress local documents. The body of work is around 85,000 words and growing. It's big and complicated, and it gets me confused at times. I've been doing "RAG" on it using several AI's -- that's Retrieval Augmented Generation, a technique for using an AI as an assistant to help you manage a complex set of information, and in my case, to develop more from it while maintaining story consistency. But, as it gets large, the AI's start to get a serious problem: they start forgetting the material in the middle, only emphasizing the things they've read first (the beginning) and at the end (most recently). Most AI's are sequential in nature -- they read your prompt, even if it has documents in it, as one long stream. The things at the beginning end up being linked and associated to many later things just because it saw them first. And things at the end get remembered, because it read nothing after them. Here's a cite to a scholarly paper on the topic if you are into such things: https://cs.stanford.edu/~nfliu/papers/lost-in-the-middle.arxiv2023.pdf Anyway, my document(s) were becoming unwieldy to use effectively with AI's, and I've been searching for a technique to address it which doesn't involve hosting a software stack. I think I've found one, and I want to share it. First, my document is an "Export" archive in XML format of all my FBSA wiki pages related to the topic. There's 23 of them, and several are very large. Fortunately, this is an easy format for LLM's to understand, even if you or I struggle to read them. it's 536 KB of text, even after I stripped out a lot of easily detected irrelevant formatting. Giving the AI and quizzing it on content topics from this file was comedic gold -- it would confused people, places, thing, events. It would get timelines out of sequence, insist people were born after they appeared in stories, and so forth. It even insisted the document was truncated in the middle -- the problem was so bad it refused to accept that the middle of the document existed. I could do better by using higher-end AI's. I tend to be a "Free" mode use of these (I'm cheap :D) and I have limited access to Gemini Pro 2.5, Chat GPT 5, or Grok 4 Heavy; and even though they did better than their unlimited lower-end versions, they still have the problem in a bad way. (continues)

-

I'm pretty sure I've answered a question like this before? But if not, or if it's been lost to the scrollback, here's mine:

-

In terms of xp/inf gain per minute of play, most teams (based on the makeup, builds, and players skills) have a difficulty "sweet spot". Play at higher difficulties is worse for many teams, because they slow down much more than the inf/drops increase. I don't see really high difficulty teams being a common way to power-level lowbies. I feel that mission-based incarnate content already was a bit too easy for teams of incarnates, but often low levels would have a miserable time on them. And after a month of ToT farming, and all the other hyper-charged leveling events we have through the year, I don't think running +5, +6, or +7's with incrementally increased rewards is going to break anything that hasn't already been broken worse.